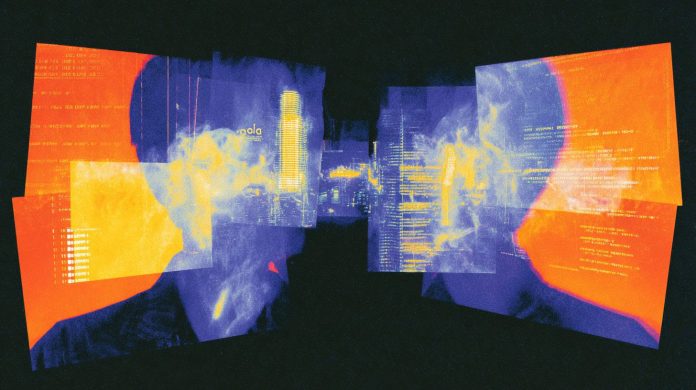

Major artificial intelligence companies are escalating concerns over what they describe as aggressive attempts by rival firms to replicate proprietary AI systems through indirect extraction methods.

OpenAI has formally alerted U.S. lawmakers to what it characterizes as improper use of its models by Chinese artificial intelligence developers, specifically naming DeepSeek. In a memo circulated to policymakers, the company alleged that DeepSeek is leveraging advanced “distillation” strategies to replicate the functional strengths of OpenAI’s systems without direct authorization.

Claims of “Free-Riding” Through Distillation

According to the memo, OpenAI contends that DeepSeek is employing distillation techniques in ways that effectively allow it to “free-ride” on the technological investments made by U.S. frontier AI laboratories. Distillation, a widely used training method in artificial intelligence, involves transferring knowledge from a large, powerful model into a smaller, more efficient system. While the technique itself is not inherently improper, OpenAI argues that the context and execution matter.
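As a rough illustration of the general technique, and not of any method described in the memo, the classic soft-label form of distillation trains a small "student" model to match the output probabilities of a large "teacher." The sketch below assumes the teacher's raw scores (logits) are available, which may not be the case through a hosted API; when only text is returned, a student would instead be trained on the teacher's generated responses. The function names and example numbers are hypothetical.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's.

    Minimizing this value pushes the student's predictions toward the teacher's.
    """
    p = softmax(teacher_logits, temperature)  # teacher (target) probabilities
    q = softmax(student_logits, temperature)  # student probabilities
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical scores over three candidate answers.
teacher = [4.0, 1.0, 0.5]
student = [2.0, 1.5, 1.0]
loss_before = distillation_loss(teacher, student)
loss_matched = distillation_loss(teacher, teacher)  # zero when the student matches the teacher
```

In a real training loop, this loss would be minimized by gradient descent over many examples, often alongside a standard loss on labeled data.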

The company stated that certain Chinese large language model providers, along with affiliated university research institutions, are interacting with OpenAI systems in a manner that could significantly accelerate the development of competing models. OpenAI believes that by systematically querying its models and analyzing the responses, these actors are extracting insights that help them build comparable systems.

OpenAI further claimed it has detected activity linked to accounts associated with DeepSeek personnel. These accounts allegedly used techniques designed to bypass access limitations and usage safeguards embedded within OpenAI’s infrastructure.

Safeguards Tightened Amid Escalating Tactics

OpenAI acknowledged that it has implemented technical barriers aimed at limiting the misuse of its outputs for model cloning. However, the company warned that the strategies employed by external actors are evolving.

In its memo, OpenAI argued that as defensive mechanisms become more sophisticated, so too do the extraction tactics used by competitors. The firm described certain methods as “obfuscated,” suggesting deliberate attempts to conceal how its systems are being leveraged.

Although distillation remains a standard and legitimate practice within the AI research community, OpenAI emphasized that the covert application of the technique to reconstruct frontier-level systems raises serious concerns. The company warned that models built through such processes may lack the critical guardrails and safety alignment mechanisms embedded in original systems. According to OpenAI, this gap could result in “dangerous outputs in high-risk domains,” particularly in sensitive areas such as cybersecurity, biosecurity, and misinformation.

The company clarified that it supports responsible and transparent applications of distillation. “There are legitimate use cases for distillation,” OpenAI noted in its communication to lawmakers. “However, we do not permit our outputs to be used to construct imitation frontier AI models that replicate our core capabilities.”

Google Flags Similar Threats to Gemini

The concerns are not isolated to OpenAI. On the same day, Google’s Threat Intelligence Group released findings indicating a surge in what it described as commercially driven attempts to extract knowledge from its flagship AI model, Gemini.

In its report, Google detailed a pattern of so-called “distillation attacks,” in which actors prompt Gemini thousands of times. The objective, according to the company, is to analyze output patterns and internal logic in order to strengthen rival AI systems.

While Google did not publicly identify specific organizations or countries involved, it stated that it had “observed and mitigated frequent model extraction attacks” originating from private sector entities worldwide. The company also referenced academic researchers who it claims have attempted to reverse engineer proprietary model behaviors.

Rising Geopolitical Stakes in AI Competition

The developments come amid intensifying global competition in artificial intelligence, particularly between U.S. and Chinese firms racing to dominate generative AI infrastructure. As governments weigh regulatory frameworks and export controls, major AI developers are increasingly framing model extraction and distillation misuse as both an intellectual property concern and a national security concern.

Industry analysts note that the line between legitimate research and unauthorized model replication remains hard to draw. Distillation has long been an accepted efficiency technique in machine learning workflows. However, companies argue that when it is conducted at scale and without explicit authorization, it undermines years of proprietary research and billions of dollars in capital investment.

Both OpenAI and Google appear to be signaling that they expect stronger policy intervention and clearer enforcement mechanisms to deter unauthorized model cloning. As frontier AI capabilities expand, the stakes surrounding intellectual property protection, safety alignment, and international AI governance continue to rise.